Deploying your ARM template with linked templates from your local machine

Every now and then you have to make some major changes to the ARM templates of the project you’re working on. While this isn’t hard to do, it can become quite time-intensive when you have to wait for the build/deployment server to pick up the changes and then for the actual deployment to run.

A faster way to test your changes is by using PowerShell or the Azure CLI to deploy your templates and see what happens.

However, when using linked templates this can become quite troublesome, as you need to specify an absolute URL where the templates can be found. At the time of writing, linked templates don’t support relative URLs. While this issue is currently under review, we still want to test our templates today. So how do we proceed?

Well, you will have to deploy your linked ARM templates to some (public) location on the internet. For side projects a GitHub repository might suffice, but for an actual commercial project you might want to take a different approach.

How to do this in Azure DevOps

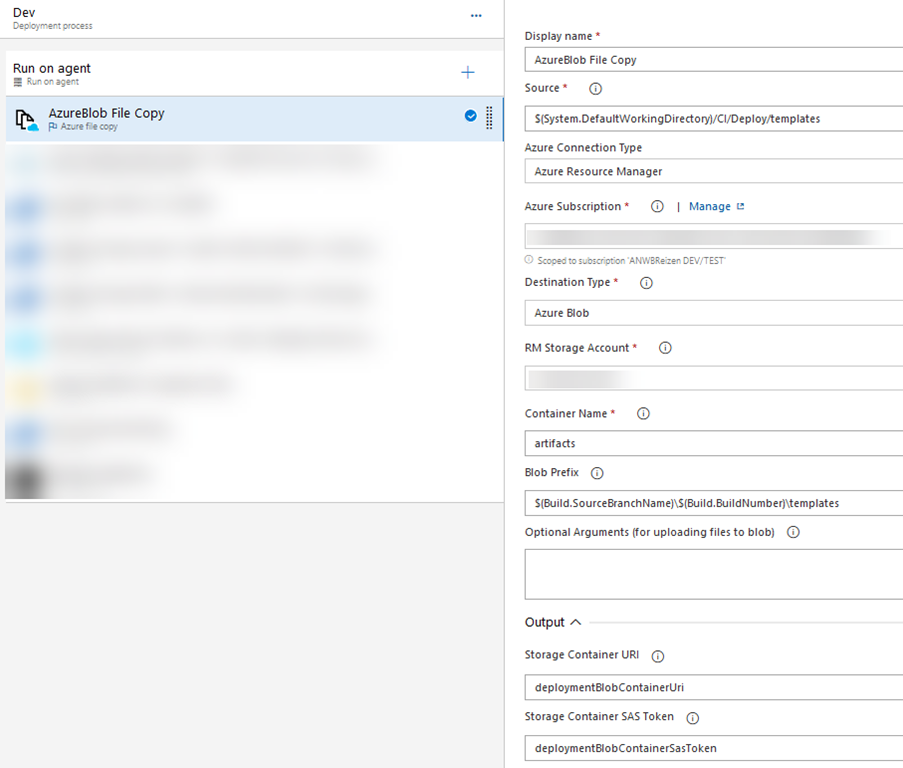

For one of the projects I’m working on, I’m using the Azure Blob File Copy step in the deployment pipeline to copy over all of the ARM templates to a container in a Storage Account.

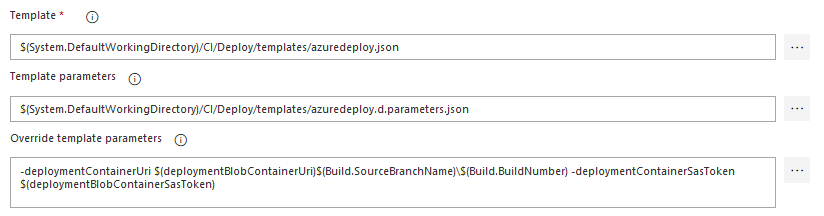

The location of the files (the container URI) and the SAS token are stored in two variables, so I can use this information in the actual deployment step.

This works quite well when using the deployment pipeline in Azure DevOps.

However, as mentioned, you might want to test your templates BEFORE running an actual deployment (and potentially breaking the deployment).

How to do this locally?

When deploying or testing your templates from your local machine, you still have to put the linked templates in some publicly reachable location. I recommend using a Storage Account for this, as that is also what the actual deployment uses.

The first thing you need to do is copy the files to a container in the Storage Account. It makes a lot of sense to set this container’s access level to Private. This way no one can read the files unless they have a valid SAS token (or the account key).
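If you’d rather script this step, it can also be done with the Azure CLI. Below is a minimal Python sketch, assuming the Azure CLI is installed; the local `./templates` folder, the `my-feature` destination folder and the `*.json` pattern are hypothetical, and `build_upload_commands` is an illustrative helper, not part of any tool. It only builds the `az` argument lists (a private container via `--public-access off`, then a batch upload) and prints them.

```python
from typing import List


def build_upload_commands(account_name: str, account_key: str, container: str,
                          source_dir: str, destination_path: str) -> List[List[str]]:
    """Build az CLI calls that create a private container and upload the templates.

    All argument values are caller-supplied placeholders; nothing is executed here.
    """
    auth = ["--account-name", account_name, "--account-key", account_key]
    create_container = [
        "az", "storage", "container", "create", *auth,
        "--name", container,
        "--public-access", "off",  # Private: no anonymous read access
    ]
    upload_templates = [
        "az", "storage", "blob", "upload-batch", *auth,
        "--destination", container,
        "--destination-path", destination_path,  # virtual folder inside the container
        "--source", source_dir,
        "--pattern", "*.json",  # upload only the JSON template files
    ]
    return [create_container, upload_templates]


# Hypothetical values matching the names used elsewhere in this post.
commands = build_upload_commands("mydeploymentfiles", "[thePrimaryKey]",
                                 "artifacts", "./templates", "my-feature")
for command in commands:
    print(" ".join(command))
```

To actually run them, pass each list to `subprocess.run(command, check=True)`. On Windows the CLI is installed as a batch wrapper (`az.cmd`), so you may need to resolve it with `shutil.which("az")` first.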

Creating the SAS token

The next thing you need to do is create a SAS token. This token is needed to access (read) the linked templates.

If you’re familiar with the Azure CLI (which is a bit more user-friendly than Azure PowerShell), you might already know how to do this. These are the commands I’m using:

```powershell
# Expiry: 30 minutes from now (UTC); start: midnight UTC today
$end=date -u -d "30 minutes" '+%Y-%m-%dT%H:%MZ'
$start=date -u '+%Y-%m-%dT00:00Z'

az storage container generate-sas `
  --account-name "mydeploymentfiles" `
  --account-key "[thePrimaryKey]" `
  --name "artifacts" `
  --start $start `
  --expiry $end `
  --permissions lr `
  --output tsv
```

Note: the script above is written for PowerShell but calls the Unix date command, which Git for Windows installs if you choose to add the optional Unix tools to your %PATH%. If you haven’t done this, you might want to change that now or use an equivalent PowerShell command instead.

This creates a SAS token with list and read (lr) permissions, valid for 30 minutes, which is needed to access the files.
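If you’d rather not depend on the date command from Git for Windows, the same two timestamps can be computed in any language. Below is a minimal Python sketch, assuming the same 30-minute window, account and container names as above; `sas_window` and `generate_sas_command` are hypothetical helper names. It takes the start date in UTC, so the start time never lies in the future for users east of UTC, and builds the same `az storage container generate-sas` call.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

# Same format as the date calls in the script above, e.g. 2019-11-19T11:06Z
SAS_TIME_FORMAT = "%Y-%m-%dT%H:%MZ"


def sas_window(valid_minutes: int = 30,
               now: Optional[datetime] = None) -> Tuple[str, str]:
    """Return (start, expiry): midnight UTC today and `valid_minutes` from now."""
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    start = now.replace(hour=0, minute=0, second=0, microsecond=0)
    expiry = now + timedelta(minutes=valid_minutes)
    return start.strftime(SAS_TIME_FORMAT), expiry.strftime(SAS_TIME_FORMAT)


def generate_sas_command(account_name: str, account_key: str, container: str,
                         start: str, expiry: str) -> List[str]:
    """Build the same `az storage container generate-sas` call as the script above."""
    return [
        "az", "storage", "container", "generate-sas",
        "--account-name", account_name,
        "--account-key", account_key,
        "--name", container,
        "--start", start,
        "--expiry", expiry,
        "--permissions", "lr",  # list + read is enough for linked templates
        "--output", "tsv",      # plain token text, without JSON quotes
    ]


start, expiry = sas_window()
print(generate_sas_command("mydeploymentfiles", "[thePrimaryKey]", "artifacts", start, expiry))
```

To create the token itself, run the list with `subprocess.run(command, capture_output=True, text=True, check=True)` and read `.stdout.strip()`.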

Deploying the templates

To deploy the ARM template from your local machine, you still have to specify the parameters you want to override; in my example above these are deploymentContainerSasToken and deploymentContainerUri.

There are multiple ways to do this. I like to create a local parameters file and reference it in the deployment. This file looks similar to the following sample.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "deploymentContainerSasToken": {
      "value": "?st=2019-11-19T00%3A00Z&se=2019-11-19T11%3A06Z&sp=rl&sv=2018-03-28&sr=c&sig=jAUxALRFTYL9t0Kuuh%2Bn/7By2eYk3eYYb3yAKZTFLrA%3D"
    },
    "deploymentContainerUri": {
      "value": "https://mydeploymentfiles.blob.core.windows.net/artifacts/my-feature/0.1.0-my-feature.1+52"
    }
  }
}
```
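If you regenerate the token often, you could also write this file from a script. Below is a minimal Python sketch, assuming the two parameter names from the sample; `build_local_parameters` and `write_local_parameters` are hypothetical helpers, and the token and URI are placeholders you pass in. It prepends the `?` when the token doesn’t already start with one.

```python
import json
from pathlib import Path

PARAMETERS_SCHEMA = ("https://schema.management.azure.com/schemas/"
                     "2015-01-01/deploymentParameters.json#")


def build_local_parameters(sas_token: str, container_uri: str) -> dict:
    """Return the local parameters document for the two overridden parameters."""
    # The token is appended to the linked template URL, so it must start with '?'.
    token = sas_token if sas_token.startswith("?") else "?" + sas_token
    return {
        "$schema": PARAMETERS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            "deploymentContainerSasToken": {"value": token},
            "deploymentContainerUri": {"value": container_uri},
        },
    }


def write_local_parameters(path: str, sas_token: str, container_uri: str) -> None:
    """Write the document as indented JSON, e.g. to local.parameters.json."""
    document = build_local_parameters(sas_token, container_uri)
    Path(path).write_text(json.dumps(document, indent=2), encoding="utf-8")
```

For example, `write_local_parameters("local.parameters.json", token, "https://mydeploymentfiles.blob.core.windows.net/artifacts/my-feature")`. Since the file holds a (short-lived) token, you may want to keep it out of source control.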

Make sure to add the ? sign at the start of the SAS token; the token generated by the command above doesn’t include it. This value is appended to the URL of the linked template, and the token has to end up in the query string.
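To see why the `?` matters, here is a minimal Python sketch of how the final URL ends up being put together. It assumes the template builds each linked template URL by concatenating the container URI, a slash, the template’s path and the SAS token (for example with `concat()` in the `templateLink` uri); the `linkedTemplates/storage.json` path and both helper names are hypothetical.

```python
from urllib.parse import parse_qs, urlsplit


def linked_template_url(container_uri: str, relative_path: str, sas_token: str) -> str:
    """Mimic concat(containerUri, '/', relativePath, sasToken) from a template."""
    return container_uri + "/" + relative_path + sas_token


def has_sas_signature(url: str) -> bool:
    """True when the URL carries a SAS signature (sig) in its query string."""
    return "sig" in parse_qs(urlsplit(url).query)


base = "https://mydeploymentfiles.blob.core.windows.net/artifacts/my-feature"
good = linked_template_url(base, "linkedTemplates/storage.json", "?sp=rl&sig=abc%3D")
bad = linked_template_url(base, "linkedTemplates/storage.json", "sp=rl&sig=abc%3D")
print(has_sas_signature(good))  # True
print(has_sas_signature(bad))   # False: the token became part of the blob path
```

Without the `?`, the token is glued onto the file name, so Azure looks for a blob that doesn’t exist and the private container rejects the anonymous request.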

You can also specify these parameters on the command line, but I don’t really like typing such long commands in a terminal. Editing them in a text editor feels a bit more robust, but do whatever works best for you.

You can now deploy your template, which will use the linked templates stored in the Storage Account.

```powershell
az group deployment create --resource-group myResourceGroupName `
  --template-file azuredeploy.json `
  --parameters azuredeploy.d.parameters.json `
  --parameters local.parameters.json
```
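Newer versions of the Azure CLI deprecate `az group deployment create` in favor of `az deployment group create`, which accepts the same `--resource-group`, `--template-file` and `--parameters` options. If you want to script the local deployment, a minimal Python sketch could build that command; the resource group and file names are the placeholders from above, and `build_deploy_command` is a hypothetical helper.

```python
from typing import List, Sequence


def build_deploy_command(resource_group: str, template_file: str,
                         parameter_files: Sequence[str]) -> List[str]:
    """Build an `az deployment group create` call with one --parameters per file."""
    command = [
        "az", "deployment", "group", "create",
        "--resource-group", resource_group,
        "--template-file", template_file,
    ]
    # Keep the same order as the command above: shared file first, local file last.
    for parameter_file in parameter_files:
        command += ["--parameters", parameter_file]
    return command


command = build_deploy_command("myResourceGroupName", "azuredeploy.json",
                               ["azuredeploy.d.parameters.json", "local.parameters.json"])
print(" ".join(command))
```

Pass the list to `subprocess.run(command, check=True)` to start the deployment.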

While none of this is rocket science, I see a lot of people struggling with it, so I hope this post helps a bit.