Publish link archive from Linkwarden with Python

There’s a new feature on this blog: my weekly links archive.

Every week a page will be added automatically based on content I have read and found interesting to share. I’m using a self-hosted Linkwarden instance to collect pages and links for a variety of topics. For the purpose of this weekly links archive I have created a new tag called Newsletter which I use to fetch the weekly links to share with all of you.

Of course, I don’t want to make my Linkwarden service open to the internet. To fetch the content from Linkwarden in my scheduled GitHub Action I use Tailscale as shared earlier on this blog.

Query Linkwarden API

Linkwarden provides a nice API that is documented (somewhat) on their site: https://docs.linkwarden.app/api/api-introduction

Not all features and possibilities are documented there, but with some creativity you’ll be able to figure out a lot.

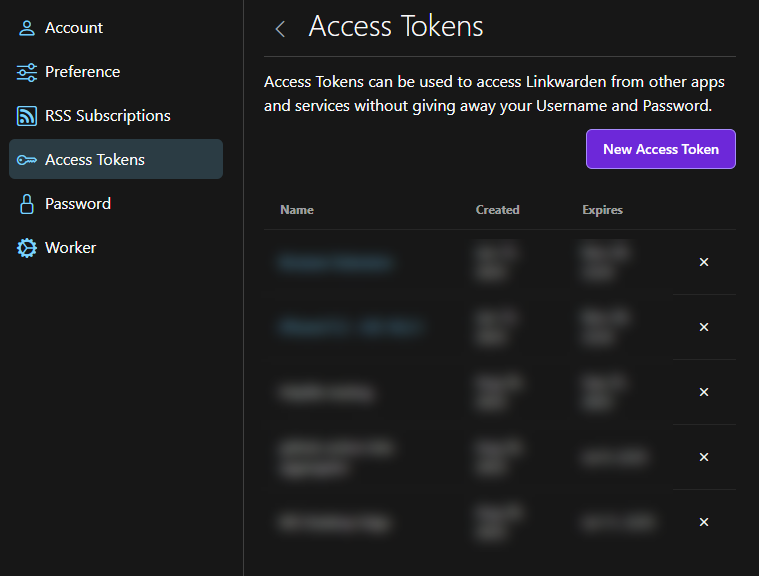

To use the API you first need an access token.

These tokens can be created on the Settings -> Access Tokens page.

Once you have this token, make sure to store it somewhere safe.

As you can see in the documentation, there’s a search endpoint, which is useful to validate that your requests are working. The endpoint I’m most interested in is the links endpoint with its tagId query string parameter. To make good use of it, you need to know the id of the tag to query; in my case it’s 5.

Do note that the links endpoint is being deprecated in favor of the search endpoint. So far I have not been able to figure out how to use the search endpoint to retrieve all links for a specific tag, but this will probably be necessary at some point in the future.

These are some of the queries I used to validate that my use case is supported.

    @protocol = http
    @host = [yourInstance]:[port]
    @tagToQuery = 5

    # `linkwarden_accesstoken` is added to my VS Code environment variables of the REST Client extension

    ### Retrieve a list of links
    GET {{protocol}}://{{host}}/api/v1/search?searchQueryString=rag
    Content-Type: application/json
    Authorization: Bearer {{linkwarden_accesstoken}}

    ### Retrieve a list of links with a specific tag
    GET {{protocol}}://{{host}}/api/v1/links?tagId={{tagToQuery}}
    Content-Type: application/json
    Authorization: Bearer {{linkwarden_accesstoken}}

Output for the links endpoint looks like this.

    {
      "response": [
        {
          // Filtered properties for brevity
          "id": 40,
          "name": "AGENTS.md Emerges as Open Standard for AI Coding Agents - InfoQ",
          "type": "url",
          "description": "",
          "url": "https://www.infoq.com/news/2025/08/agents-md/?utm_source=newsletter&utm_medium=email&utm_term=2025-09-02&utm_campaign=Global+AI+Weekly+-+Issue+113",
          "textContent": " A new convention is emerging...",
          "preview": "archives/preview/4/40.jpeg",
          "createdAt": "2025-09-02T08:18:56.748Z",
          "updatedAt": "2025-09-02T08:20:22.151Z",
          "tags": [
            {
              "id": 5,
              "name": "Newsletter"
            }
          ],
          "collection": {
            "id": 4
          },
          "pinnedBy": []
        },
        // And many more links
        {}
      ]
    }
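For a links page, only a handful of these properties matter. The sketch below reduces a response with the shape shown above to name, URL, creation date and tag names. The function name `summarize_links` and the shortened sample URL are illustrative assumptions, not part of Linkwarden or of my script.

```python
from typing import Any, Dict, List

# Trimmed copy of the sample response shown above (URL shortened for readability).
sample = {
    "response": [
        {
            "id": 40,
            "name": "AGENTS.md Emerges as Open Standard for AI Coding Agents - InfoQ",
            "url": "https://www.infoq.com/news/2025/08/agents-md/",
            "createdAt": "2025-09-02T08:18:56.748Z",
            "tags": [{"id": 5, "name": "Newsletter"}],
        }
    ]
}


def summarize_links(payload: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Keep only the fields needed to list a link on a page (hypothetical helper)."""
    summaries = []
    for link in payload.get("response", []):
        summaries.append({
            "name": link.get("name", ""),
            "url": link.get("url", ""),
            "createdAt": link.get("createdAt", ""),
            # Tag objects carry an id and a name; only the name is kept here.
            "tags": [tag["name"] for tag in link.get("tags", [])],
        })
    return summaries


print(summarize_links(sample))
```

Using `.get` with defaults means a record with missing properties still produces an entry instead of raising.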

Query Linkwarden API via Python

I want to create my weekly links page automatically by scheduling a GitHub Action. For this, I have created a Python script.

It’s not very complicated to translate the above to something in Python, but I’ll share mine anyway.

You can see a LinkWardenClient is defined with a method get_links_by_tag to retrieve all links for a provided tag id.

    import json
    from typing import Any, Dict, List

    import requests


    class LinkWardenClient:
        def __init__(self, protocol: str, host: str, access_token: str):
            self.base_url = f"{protocol}://{host}/api/v1"
            self.headers = {
                "Content-Type": "application/json",
                "Authorization": f"Bearer {access_token}"
            }

        def get_links_by_tag(self, tag_id: int) -> List[Dict[str, Any]]:
            """Fetch links from LinkWarden API by tag ID"""
            url = f"{self.base_url}/links?tagId={tag_id}"

            try:
                response = requests.get(url, headers=self.headers)
                # Raise for 4xx/5xx responses so they end up in the handler below
                response.raise_for_status()
                data = response.json()
                # The links are wrapped in a top-level "response" property
                return data.get('response', [])
            except requests.exceptions.RequestException as e:
                print(f"Error fetching links: {e}")
                return []
            except json.JSONDecodeError as e:
                print(f"Error parsing JSON response: {e}")
                return []

Usage is fairly straightforward.

This is copied from my Python script in which I’m specifying many values as input arguments.

    client = LinkWardenClient(args.protocol, args.host, args.access_token)
    all_links = client.get_links_by_tag(args.tag_id)
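The `args` object in the snippet above comes from the command-line arguments. A minimal sketch of how such arguments could be defined with `argparse` follows. The attribute names match the usage above, but the flag names, defaults and the `LINKWARDEN_ACCESS_TOKEN` environment variable are assumptions for illustration, not necessarily what my script uses.

```python
import argparse
import os
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(
        description="Create a weekly links page from Linkwarden"
    )
    parser.add_argument("--protocol", default="http", choices=["http", "https"])
    parser.add_argument("--host", required=True,
                        help="Linkwarden host, including the port if needed")
    # Falling back to an environment variable keeps the token off the command line
    # (the variable name is an assumption for this sketch).
    parser.add_argument("--access-token",
                        default=os.environ.get("LINKWARDEN_ACCESS_TOKEN"),
                        help="Linkwarden access token")
    parser.add_argument("--tag-id", type=int, required=True,
                        help="Id of the tag to fetch links for")
    return parser


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = build_parser()
    args = parser.parse_args(argv)
    if not args.access_token:
        parser.error("an access token is required")
    return args


# argparse turns --access-token and --tag-id into args.access_token and args.tag_id.
args = parse_args(["--host", "linkwarden.example:3000",
                   "--tag-id", "5",
                   "--access-token", "dummy-token"])
print(args.protocol, args.host, args.tag_id)
```

In a GitHub Action the token would typically be passed in from a repository secret rather than typed on the command line.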

Filter the retrieved links

You might have noticed there is no start or end date you can specify when retrieving links. Filtering has to be done client-side.

This is handled via my LinkFilter.

As input it takes the entire collection of retrieved links. For each link it checks whether createdAt is 7 days or less in the past, and it returns the filtered collection.

    from datetime import datetime, timedelta, timezone
    from typing import Any, Dict, List


    class LinkFilter:
        @staticmethod
        def filter_last_week(links: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
            """Filter links to only include those from the last week"""
            # createdAt is a UTC timestamp (trailing 'Z'), so compare against UTC "now"
            one_week_ago = datetime.now(timezone.utc) - timedelta(days=7)
            filtered_links = []

            for link in links:
                try:
                    # Parse the createdAt timestamp as a timezone-aware datetime
                    created_at = datetime.fromisoformat(link['createdAt'].replace('Z', '+00:00'))
                    if created_at >= one_week_ago:
                        filtered_links.append(link)
                except (ValueError, KeyError, TypeError) as e:
                    print(f"Error parsing date for link {link.get('name', 'Unknown')}: {e}")
                    continue

            return filtered_links
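Because the filter depends on the current time, it is hard to check against fixed dates. The sketch below is a variant that accepts the reference time as a parameter and compares timezone-aware UTC values. The function name `filter_since` and its `days` and `now` parameters are assumptions for illustration; they are not part of my script.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List, Optional


def filter_since(links: List[Dict[str, Any]],
                 days: int = 7,
                 now: Optional[datetime] = None) -> List[Dict[str, Any]]:
    """Return the links created within `days` days before `now` (UTC by default)."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    recent = []
    for link in links:
        try:
            created_at = datetime.fromisoformat(link["createdAt"].replace("Z", "+00:00"))
        except (KeyError, ValueError):
            continue  # skip links without a usable createdAt
        if created_at.tzinfo is None:
            # Assumption: timestamps without an offset are UTC.
            created_at = created_at.replace(tzinfo=timezone.utc)
        if created_at >= cutoff:
            recent.append(link)
    return recent


# Fixed reference time, so the result does not depend on when this runs.
reference = datetime(2025, 9, 5, 12, 0, tzinfo=timezone.utc)
links = [
    {"name": "recent", "createdAt": "2025-09-02T08:18:56.748Z"},
    {"name": "old", "createdAt": "2025-08-20T10:00:00.000Z"},
    {"name": "no date"},
]
print([link["name"] for link in filter_since(links, now=reference)])
```

With the reference time pinned to 5 September 2025, only the link created on 2 September falls inside the 7-day window.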

Wrapping up

The Python code shared above is the bread and butter of creating the weekly archive.

If you are interested in doing something similar for your own (Hugo) blog, I’ve shared my complete Python file and GitHub Action workflow on a Gist over here: Create weekly link archive for Hugo blog.

The script retrieves and filters the links and creates new Markdown files (weekly and overview) with them.
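To give an idea of that last step, here is a minimal sketch of turning filtered links into a Markdown page with Hugo front matter. It is not the code from the Gist: the function name `render_weekly_page`, the title format and the front matter fields used here are assumptions for illustration.

```python
from datetime import date
from typing import Any, Dict, List


def render_weekly_page(links: List[Dict[str, Any]], week_of: date) -> str:
    """Render links as a Markdown page with minimal Hugo front matter (sketch)."""
    lines = [
        "---",
        f'title: "Weekly links for {week_of.isoformat()}"',
        f"date: {week_of.isoformat()}",
        "---",
        "",
    ]
    for link in links:
        url = link.get("url", "")
        # Fall back to the URL when a link has no name.
        name = link.get("name") or url
        # Escape square brackets so they do not break the Markdown link syntax.
        name = name.replace("[", "\\[").replace("]", "\\]")
        lines.append(f"- [{name}]({url})")
    return "\n".join(lines) + "\n"


page = render_weekly_page(
    [{
        "name": "AGENTS.md Emerges as Open Standard for AI Coding Agents - InfoQ",
        "url": "https://www.infoq.com/news/2025/08/agents-md/",
    }],
    date(2025, 9, 5),
)
print(page)
```

Returning the page as a string keeps the rendering separate from writing files, so the output can be inspected before anything lands in the content folder.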

Do keep checking what I’m sharing every week and if you create something similar, let me know!